Setup

Environment

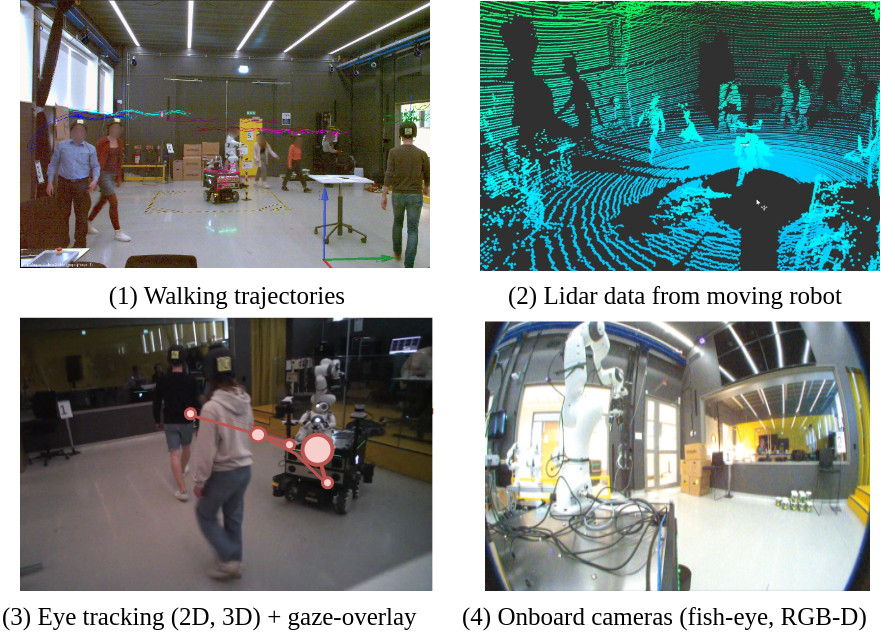

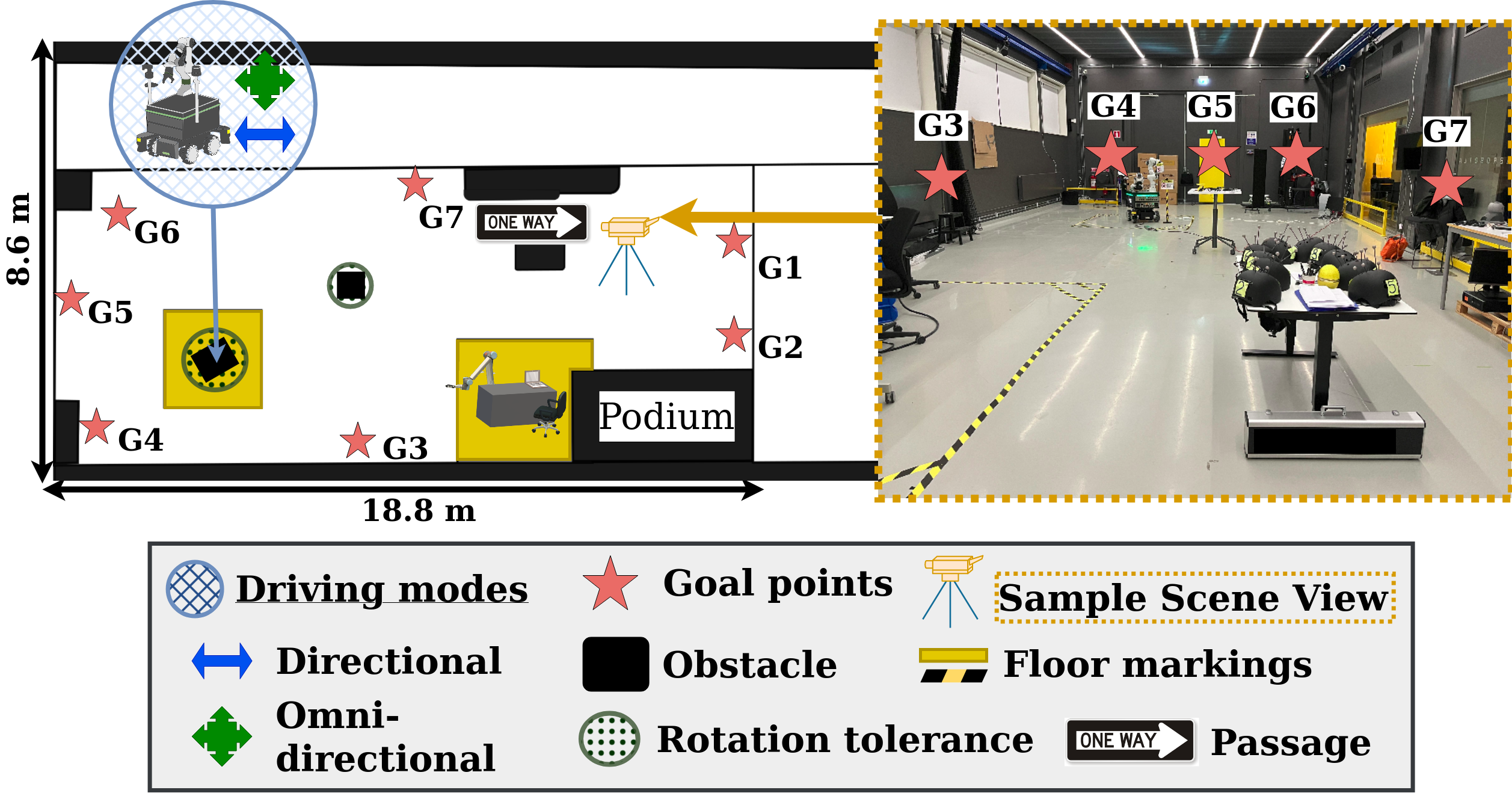

The dataset was recorded in a spacious laboratory room measuring 8.4 × 18.8 m. The room, where the motion capture system is installed, is mostly empty to allow large groups to maneuver, but it also includes several constrained areas where obstacle avoidance and a choice of homotopy class are necessary. Goal positions are placed to force navigation along the room and generate frequent interactions in its center, while the placement of obstacles prevents walking between goals in a straight line.

Motion Capture system

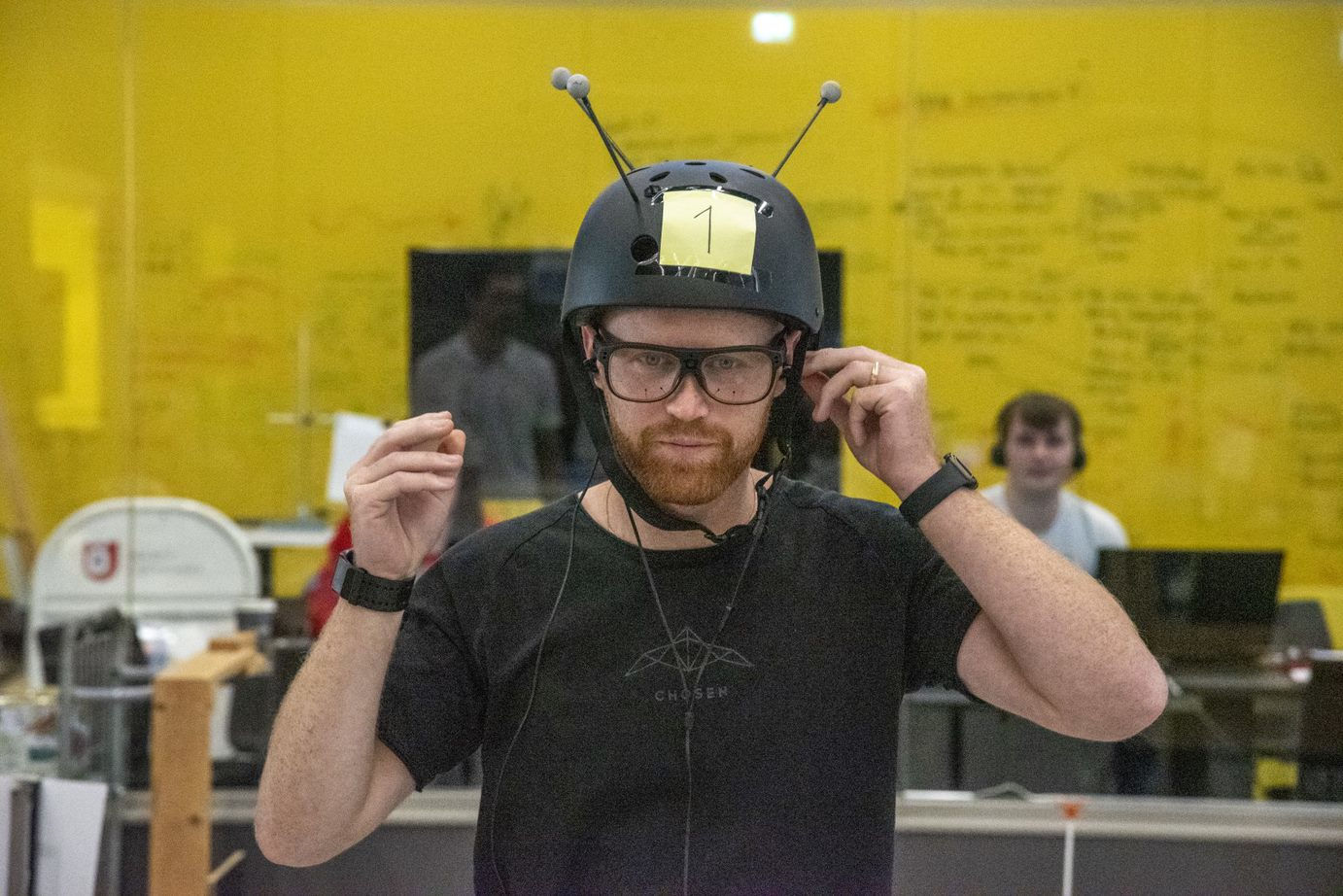

To track the motion of the agents, we used the Qualisys Oqus 7+ motion capture system with 10 infrared cameras mounted on the perimeter of the room. For people tracking, reflective markers were arranged in distinctive 3D patterns on bicycle helmets. There are 10 helmets in this dataset, numbered 1 to 10.

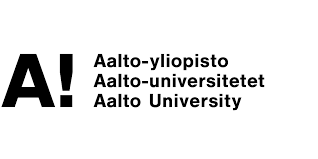

Eye tracking

To record gaze directions, we used two Tobii Pro Glasses (Models 2 and 3) and a Pupil Core Invisible mobile eye-tracking headset. The gaze sampling frequency of both the Tobii Pro Glasses and the Pupil Core Invisible is 50 Hz. All three headsets have a scene camera, which records video at 25 fps on the Tobii devices and at 30 fps on the Pupil headset. A gaze-overlaid version of these videos is included in this dataset. Additionally, we include the 2D (for all devices) and 3D (for the Tobii devices) eye-tracking data aligned with the motion capture system's data.
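Because the gaze stream (50 Hz) is faster than the scene video (25 or 30 fps), each video frame spans more than one gaze sample. The sketch below illustrates that arithmetic. It is not the dataset's own alignment procedure: the function name `gaze_indices_for_frame` is hypothetical, and it assumes an idealized case in which both streams start at the same instant and are sampled perfectly uniformly.

```python
from fractions import Fraction
import math


def gaze_indices_for_frame(frame_idx, video_fps, gaze_hz=50):
    """Return the gaze sample indices whose timestamps fall inside one video frame.

    Assumption (not from the dataset): both streams start at t = 0 and are
    uniformly sampled, so gaze sample k is taken at k / gaze_hz seconds and
    frame f covers the interval [f / video_fps, (f + 1) / video_fps).
    """
    # Exact rational arithmetic avoids floating-point rounding at frame borders.
    samples_per_frame = Fraction(gaze_hz) / Fraction(video_fps)
    first = math.ceil(frame_idx * samples_per_frame)
    end = math.ceil((frame_idx + 1) * samples_per_frame)  # exclusive bound
    return list(range(first, end))


# At 25 fps every frame covers exactly two gaze samples.
tobii = [gaze_indices_for_frame(f, 25) for f in range(3)]
# At 30 fps frames alternate between two and one gaze samples (5 per 3 frames).
pupil = [gaze_indices_for_frame(f, 30) for f in range(3)]
```

In real recordings the timestamps stored with each stream should be used instead of index arithmetic, since clocks drift and samples can be dropped.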

Robot

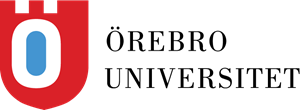

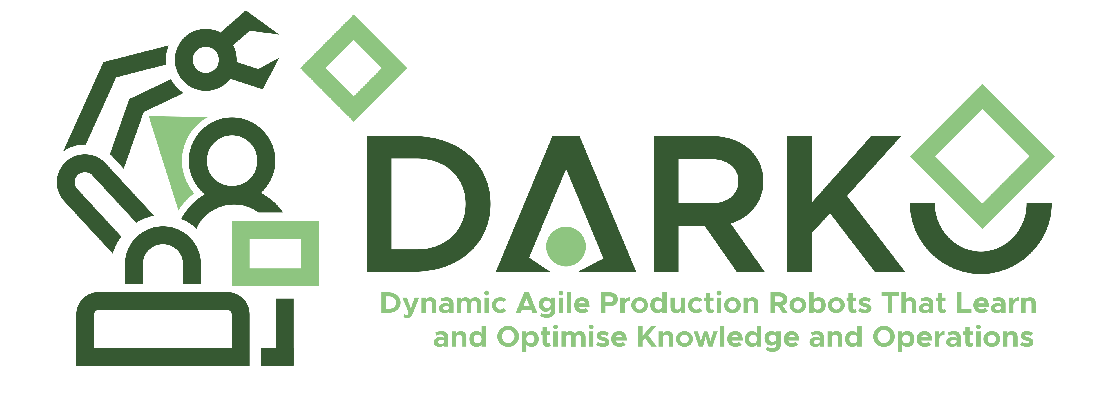

Our environment features several robotic arms as static obstacles and a mobile robot called DARKO. DARKO has an omnidirectional base with a robotic arm mounted on top. The base is an RB-Kairos+ and the arm is the Collaborative Robot Panda from Franka Emika. The robot base dimensions are 760×665×690 mm. The maximum reach height of the robot arm is 855 mm. The robot has one Ouster OS0-128 LiDAR, two Azure Kinect RGB-D cameras (one used in these recordings), two Basler fish-eye RGB cameras, and two Sick MicroScan 2D safety LiDARs. The Azure Kinect camera has a 75-degree horizontal field of view and a tracking range of up to 5 m. Finally, the robot is equipped with an “Anthropomorphic robot Mock Driver” (ARMoD). In scenarios 3, 4, and 5, DARKO is a moving agent and plays an important role in the study of human-robot interaction.
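The stated camera limits (75-degree horizontal field of view, tracking range up to 5 m) can be turned into a simple visibility test, for example to estimate when a tracked person could have been seen by the robot's Azure Kinect. The following is a minimal planar sketch under stated assumptions: the function `in_camera_view` and its parameters are hypothetical, the field of view is taken to be symmetric about the optical axis, and occlusions, the vertical field of view, and the camera's mounting offset on the robot are ignored.

```python
import math


def in_camera_view(px, py, cam_x=0.0, cam_y=0.0, cam_yaw=0.0,
                   hfov_deg=75.0, max_range_m=5.0):
    """Check whether point (px, py) lies inside a 2D camera sector.

    Assumptions (illustrative only): positions are in metres in a common
    ground-plane frame, cam_yaw is the optical-axis heading in radians,
    and the horizontal field of view is symmetric about that axis.
    """
    dx, dy = px - cam_x, py - cam_y
    dist = math.hypot(dx, dy)
    if dist == 0.0 or dist > max_range_m:
        return False
    # Bearing of the point relative to the optical axis, wrapped to [-pi, pi).
    bearing = math.atan2(dy, dx) - cam_yaw
    bearing = (bearing + math.pi) % (2.0 * math.pi) - math.pi
    return abs(bearing) <= math.radians(hfov_deg) / 2.0


# A person 3 m straight ahead is visible; one 6 m ahead is out of range.
ahead_near = in_camera_view(3.0, 0.0)
ahead_far = in_camera_view(6.0, 0.0)
# A person 3 m to the side is outside the 37.5-degree half-angle.
to_the_side = in_camera_view(0.0, 3.0)
```

With motion capture trajectories of both the robot and the helmets, the same test could be evaluated per frame by passing the robot's pose as `cam_x`, `cam_y`, and `cam_yaw`.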

Human roles description

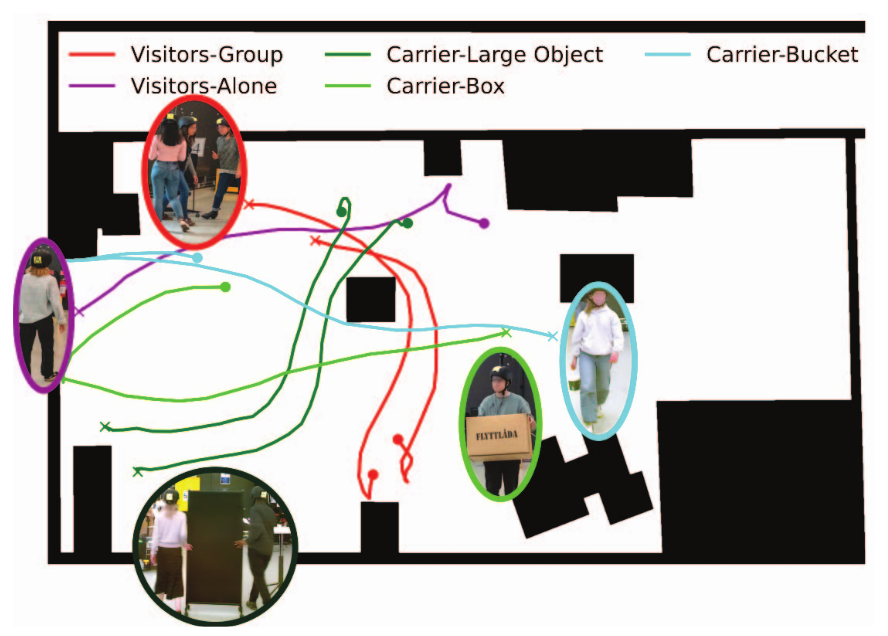

To collect motion data applicable to a variety of research areas, we designed several scenarios that promote social interactions between individuals, groups of people, and robots. In a segment of the recordings, participants engaged in activities (roles) specifically designed to emulate industrial tasks. These activities include both group and individual movements, as well as the transportation of various objects, from boxes and buckets to larger items such as a poster stand. The goal is to motivate participants to move heterogeneously and to create meaningful, rich, and natural interactions arising from the different human roles.