About

We present THÖR: a dataset of motion trajectories with diverse and accurate social human motion data in a shared indoor environment.

Overview

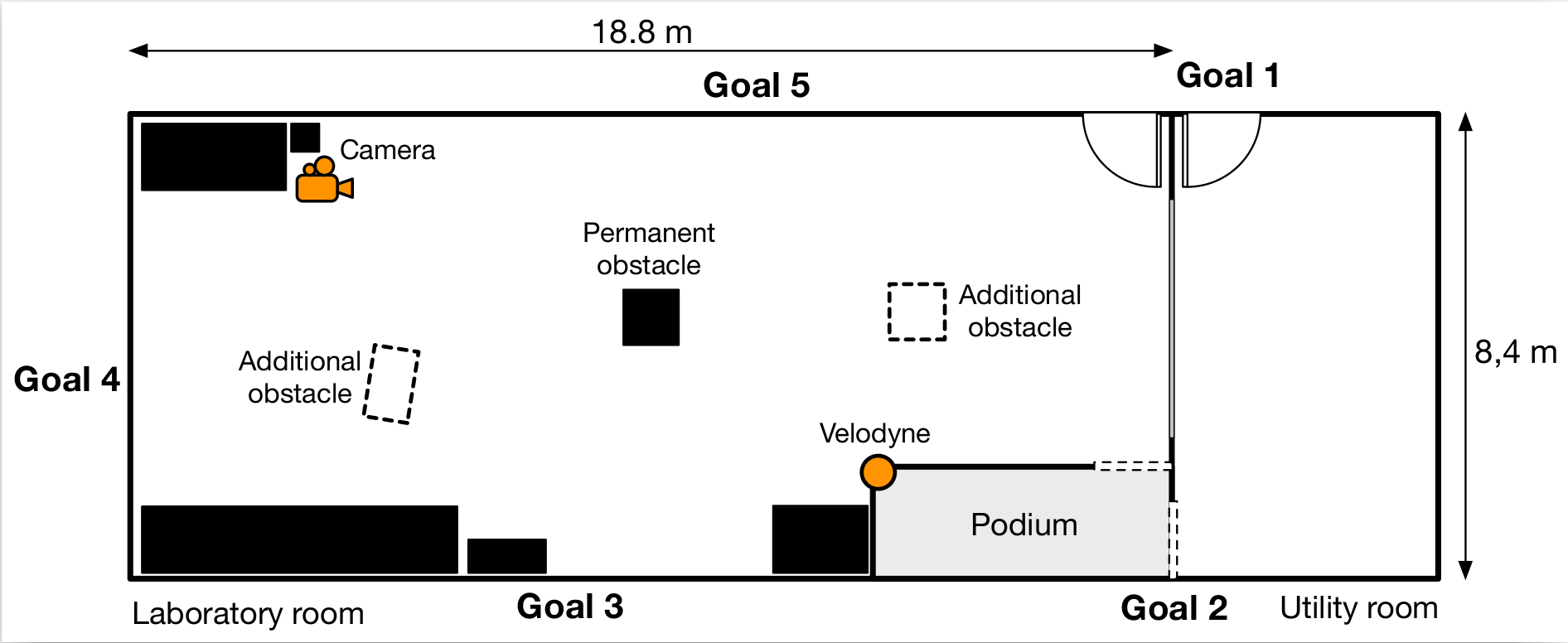

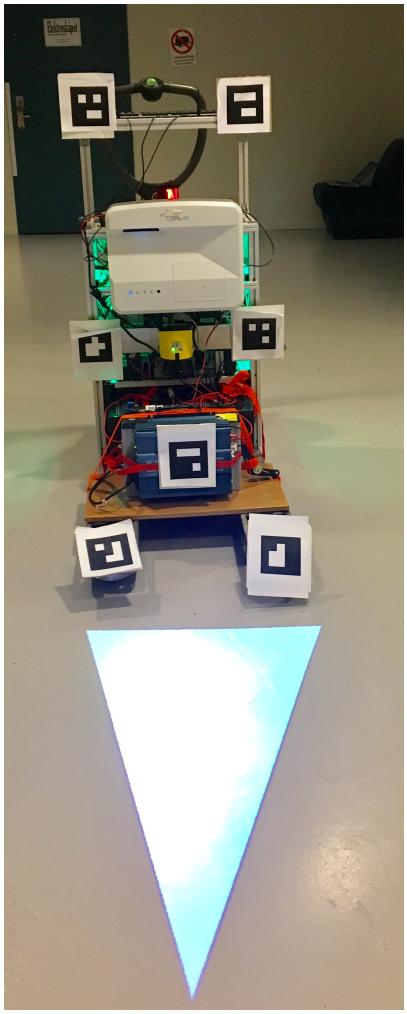

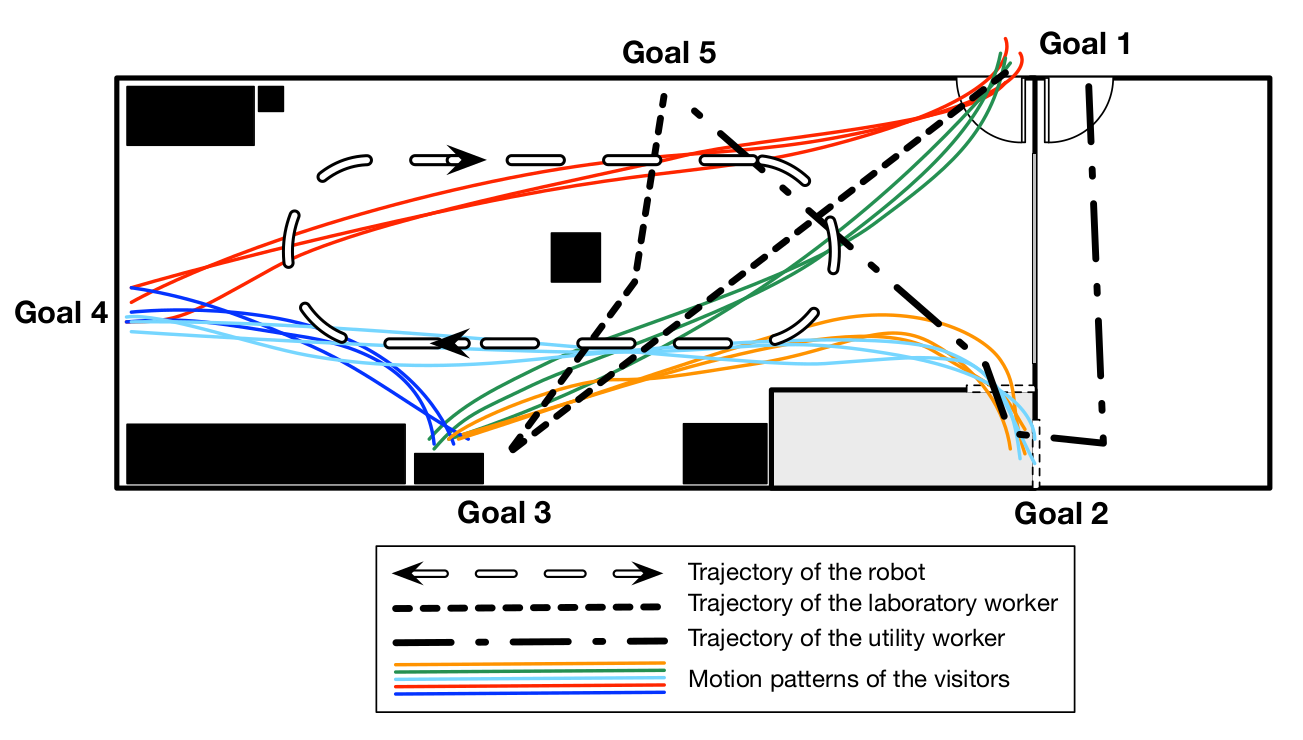

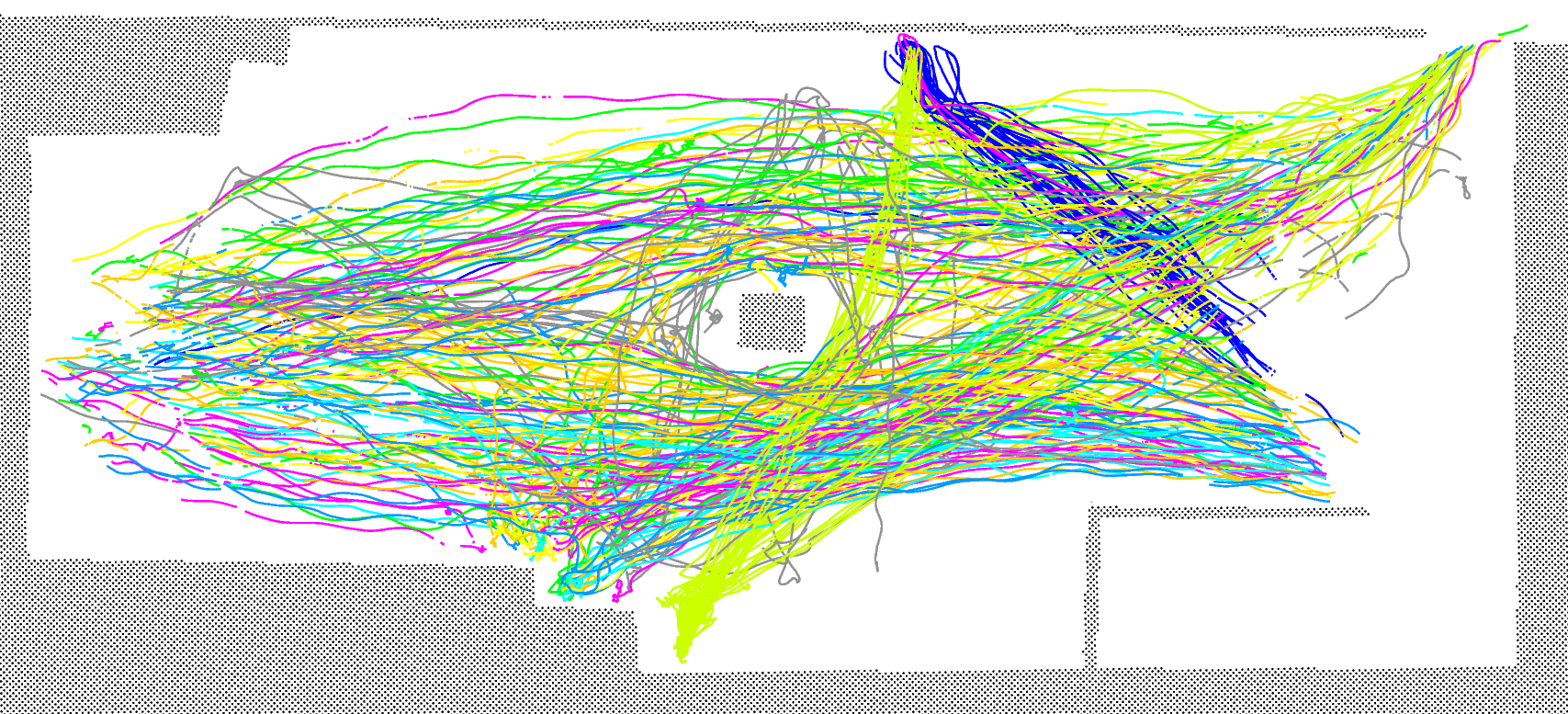

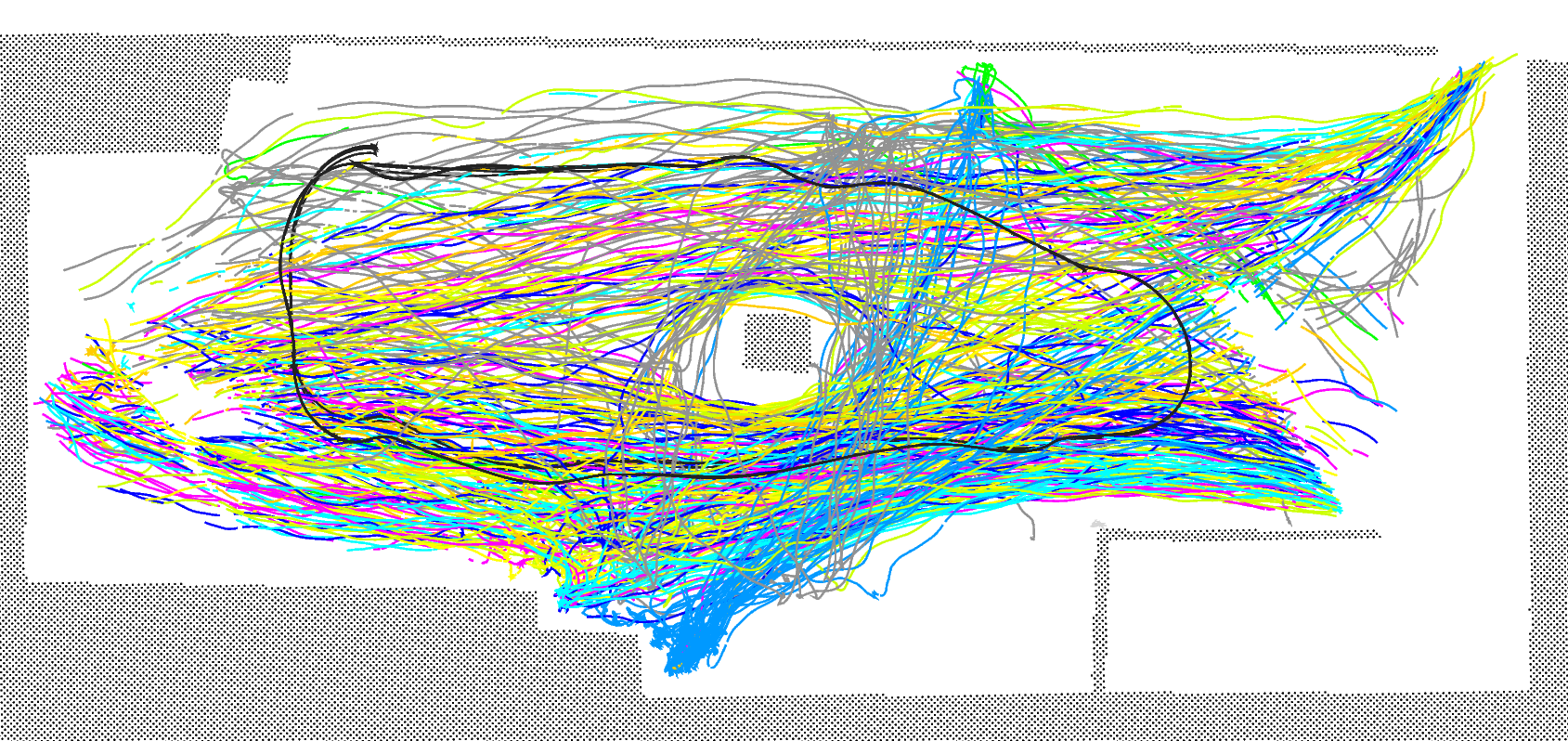

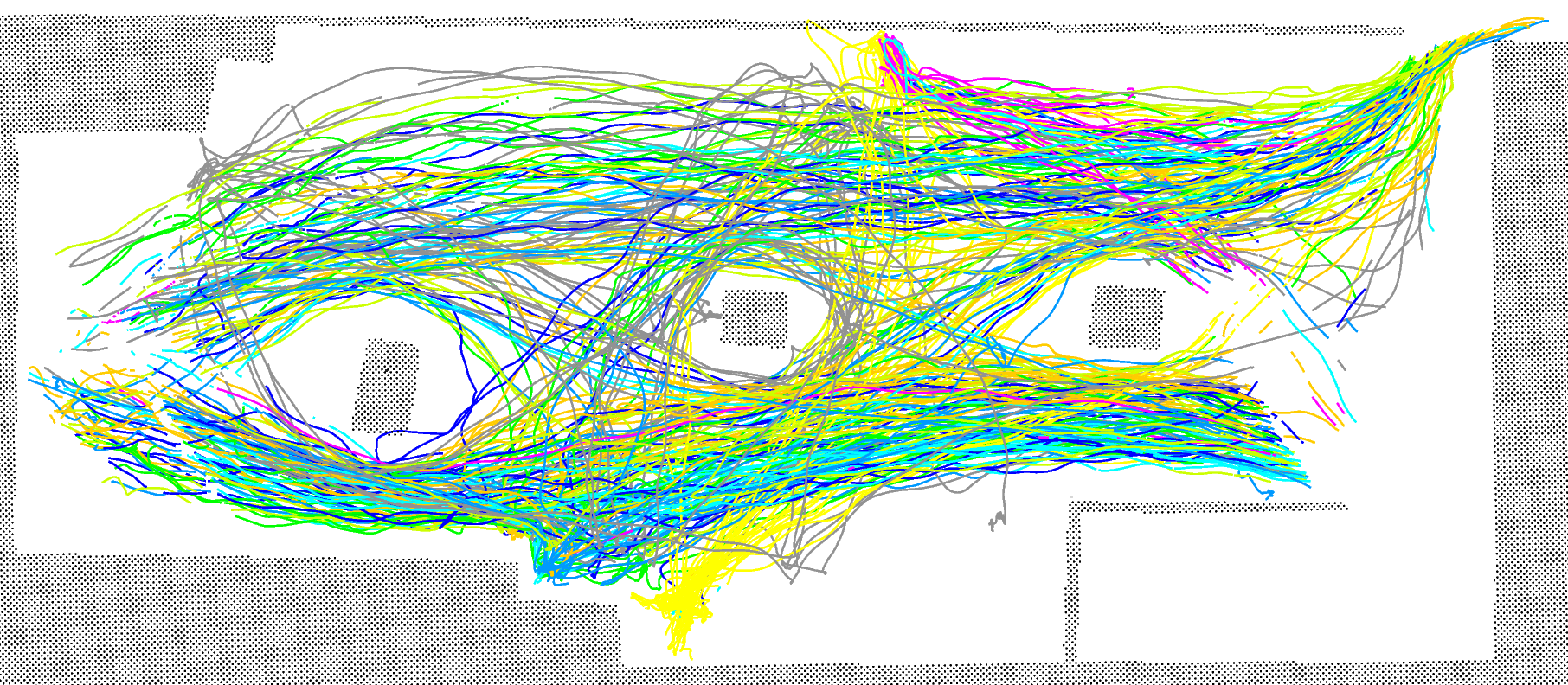

- High variety of human behavior and social interactions between individuals, groups of people and a mobile robot in the presence of static obstacles

- Over 60 minutes of continuous motion tracking

- Over 600 individual and group trajectories between multiple goal points

- Accurate data for position and 3D head orientation, recorded with a motion capture system at 100 Hz

- Eye gaze data at 50 Hz, with gaze-overlaid first-person video at 25 Hz

- 3D LiDAR scans from a stationary sensor

- Video recording from a static camera

- Map of static obstacles, goal coordinates, grouping information

- Several recordings with varying obstacles and motion of the robot
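The streams listed above are sampled at different rates (100 Hz motion capture, 50 Hz eye gaze, 25 Hz first-person video), so combining them usually means matching samples by time. The following is a minimal sketch of nearest-timestamp alignment. It assumes every stream carries timestamps in seconds on a shared clock, which is an assumption made for illustration and not a description of the dataset's actual file format or synchronization. The timestamps are synthetic, and the names `nearest_index` and `align` are hypothetical helpers, not part of any THÖR tooling.

```python
from bisect import bisect_left


def nearest_index(timestamps, t):
    """Return the index of the entry in `timestamps` closest to `t`.

    `timestamps` must be sorted in ascending order.
    """
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Choose the closer of the two neighbours; ties go to the earlier sample.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def align(reference_ts, other_ts):
    """For each reference timestamp, find the nearest sample index in `other_ts`."""
    return [nearest_index(other_ts, t) for t in reference_ts]


# Synthetic one-second timelines at the three rates named in the list above.
# Real recordings would supply their own timestamps (assumption).
mocap_ts = [k / 100 for k in range(100)]  # 100 Hz
gaze_ts = [k / 50 for k in range(50)]     # 50 Hz
video_ts = [k / 25 for k in range(25)]    # 25 Hz

# Index of the motion capture sample nearest to each gaze sample / video frame.
gaze_to_mocap = align(gaze_ts, mocap_ts)
video_to_mocap = align(video_ts, mocap_ts)
```

With ideal, jitter-free timestamps the mapping reduces to taking every second or fourth motion capture sample. The nearest-timestamp search is used here because it also tolerates dropped samples and small clock offsets.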

Updates

- 11.09.19 The THÖR dataset is published online